The DMTA Loop

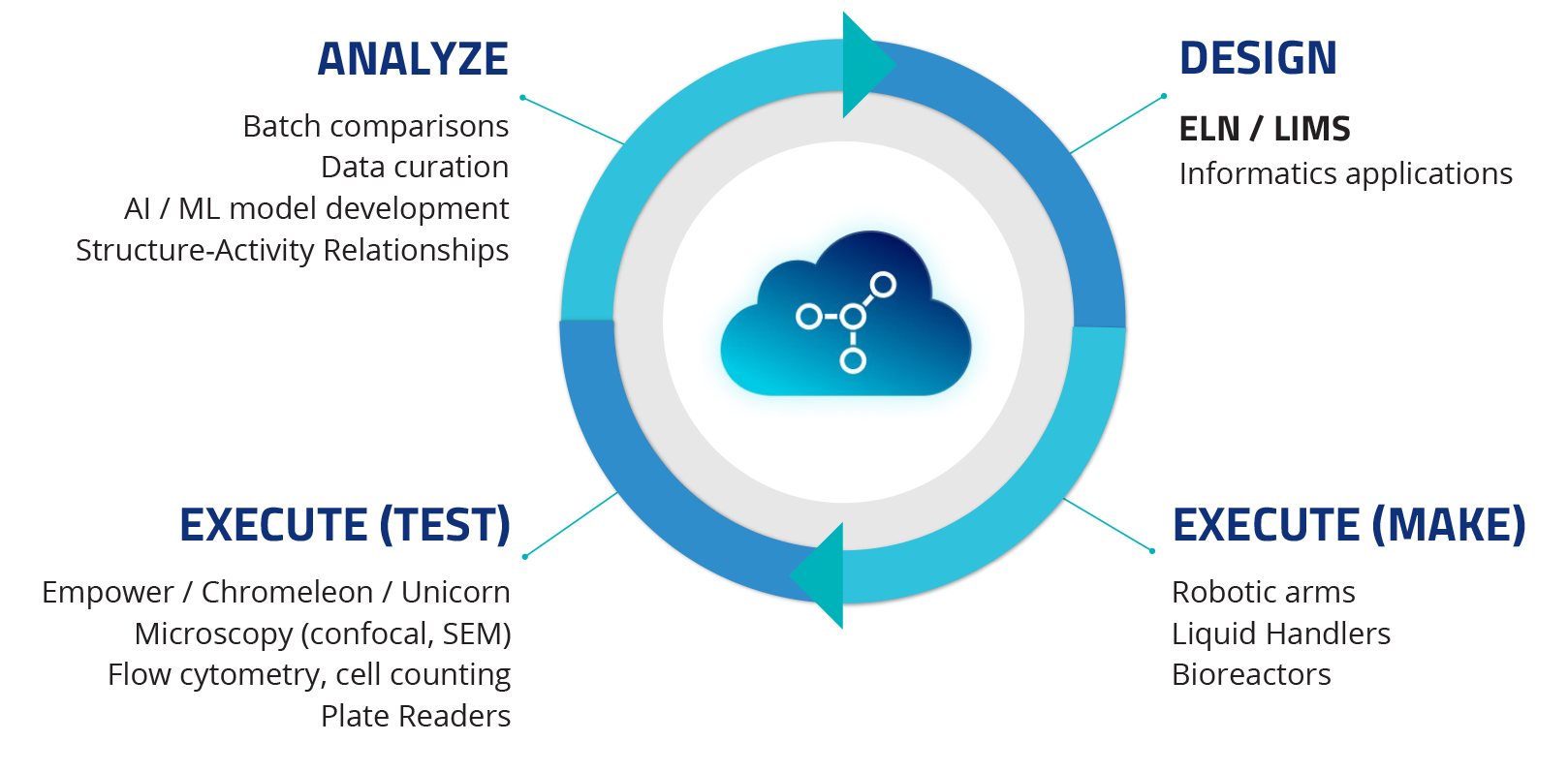

Perhaps you learned the scientific method in high school: define a problem, advance a hypothesis, conduct an experiment, analyze data, and draw conclusions. Industrial scientists across many fields have streamlined this process into the Design-Make-Test-Analyze (DMTA) loop. You start with a design, run experiments, collect and test samples, then analyze the data and use the resulting insights to iterate on the next design. Electronic lab notebooks (ELNs) and laboratory information management systems (LIMS) are arguably two of the most crucial tools used in the Design and Analyze phases of the DMTA cycle.

Let’s craft an analogy: an ELN is like a storybook, with a distinct beginning, middle, and end. Often, depending on your domain, you start at the end, with a picture of what you hope to achieve. For chemists, it may be a reaction with a predicted end product. For biologists, perhaps it’s a specific animal model of disease or a protein structure. For pharmacologists, it’s a target assay or endpoint.

Because ELNs are experiment-oriented, they focus on experiment results and function much like an “Evernote for science labs.” ELN formats are less rigidly structured and more flexible, allowing users to dictate how to organize their work and results.

A LIMS, on the other hand, is “just the facts, ma’am”: it is sample-oriented and focuses on items such as barcode tracking, workflow management, and handling the results of analyses performed on groups or lists of samples. LIMS typically enforce a more rigid structure that is less free-form than that of an ELN.

The Data Ecosystem: Data Sources and Targets

Let’s now zoom out and consider the informatics landscape in biopharma. Few would disagree that new challenges arise as data complexity increases and systems fragment. Workflows are often held together with brittle, point-to-point integrations. Labs and even individual scientists produce heterogeneous data streams in a multitude of vendor formats. To introduce some order into this otherwise chaotic data landscape, we here at TetraScience like to apply a mental model where each workflow moves primary data from data sources to data targets.

Data sources are where valuable scientific data are produced (or entered) by scientists and generated by lab instruments. Some common data sources include:

- Scientific instruments and their control software, such as chromatography data systems (CDS) and lab robotics automation systems

- Informatics applications like electronic laboratory notebooks (ELNs) and laboratory information management systems (LIMS)

- Outsourced partners like CROs (research) and CDMOs (development & manufacturing)

Data targets, on the other hand, are systems that consume data to deliver insights and conclusions or reports. There are roughly two types of data targets:

- Data science-oriented applications and tools including visualization and analytics tools, data science tools, AI and machine learning tools such as TensorFlow and platforms such as those provided by AWS, Google, and Microsoft

- Lab informatics systems, including laboratory information management systems (LIMS), manufacturing execution systems (MES), electronic lab notebooks (ELNs), and instrument control software, such as chromatography data systems (CDS) and lab robotics automation systems. Interestingly, these data targets are also treated as data sources in certain workflows.

The Achilles’ Heel of ELN and LIMS

Biopharmas make significant investments when adopting ELN/LIMS and it pays dividends to make it as seamless as possible for scientists to use these solutions.

What is one major challenge impeding scientific ROI using ELN/LIMS? The tedious, manual busy work of capturing experimental workflow from, and getting data into, the ELN/LIMS. For example:

- Scientists must manually transcribe design parameters or sample information into the instruments or instrument control software

- Scientists need to manually enter experimental data into the ELN/LIMS, and they often struggle with binary, unstructured, or semi-structured data

When the work requires regulatory compliance or high data integrity, these challenges become even more pronounced, and often call for a review by a second or even a third scientist. When high-throughput experimentation (HTE) is involved, or large files are produced, the problem becomes unmanageable due to the sheer size of the data and the number of operations needed. Imagine spending one hour to run your assay and then two or three more to collect, move, transcribe, upload, and review the same data!

In summary, the major pain points in the DMTA cycle are moving information from design to execution and moving data back from execution to analysis. Put another way, the pain lies in moving data from the data sources to the data targets.

Tetra Data Platform (TDP) along with Tetra Data connects ELN/LIMS from across biopharma’s digital lab stack to enable a true “lab of the future” or “digital lab” experience. Scientists design experiments in ELN/LIMS, bring their experimental design or sample list to the lab for execution, and then get the data back into the ELN/LIMS without worrying about manually moving and transforming the data. Biopharma organizations often use multiple ELN/LIMS, depending on the department or data streams. TDP serves as the data exchange layer for these multiple ELN/LIMS with no additional work needed from the scientists.

TDP takes raw, primary scientific data and uses it to create Tetra Data, which is compliant, harmonized, liquid, and actionable to facilitate the Design-Make-Test-Analyze (DMTA) loop at the core of every scientific process.

How Tetra Data Connects Data Sources to Targets

Tetra Data provides a model of essential data properties that enable organizations to connect their data sources and targets and manage their scientific data. These properties are:

- Compliant with GxP and other regulations

- Harmonized across data sources and vendor formats

- Liquid to seamlessly flow between data sources and targets across different instruments, systems, and software

- Actionable to enable analytics, visualizations, and AI/ML

With Tetra Data, scientific data are harmonized across different vendor formats for various lab instruments and informatics applications into a common format and stored centrally in the cloud. From there, liquid Tetra Data flows seamlessly to any targets. Tetra Data makes it painless to connect ELN/LIMS sources and targets and provide data across all phases of the DMTA loop.
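To make the idea of harmonization concrete, here is a minimal sketch in Python. It is not the Tetra Data schema or any TDP API. The two vendor export layouts, the field names, and the `HarmonizedResult` record are all hypothetical, chosen only to show how results that arrive in different shapes and units can be mapped into one common format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HarmonizedResult:
    """A common record shape shared by all sources (illustrative only)."""
    sample_id: str
    analyte: str
    value: float
    unit: str
    source: str


def from_vendor_a(row: dict) -> HarmonizedResult:
    # Hypothetical vendor A export: flat keys, concentration in mg/mL.
    return HarmonizedResult(
        sample_id=row["SampleName"],
        analyte=row["Compound"],
        value=float(row["Conc_mg_mL"]),
        unit="mg/mL",
        source="vendor_a",
    )


def from_vendor_b(row: dict) -> HarmonizedResult:
    # Hypothetical vendor B export: nested keys, concentration in ug/mL.
    return HarmonizedResult(
        sample_id=row["sample"]["id"],
        analyte=row["analyte"],
        value=float(row["result"]["ug_per_mL"]) / 1000.0,  # ug/mL -> mg/mL
        unit="mg/mL",
        source="vendor_b",
    )


vendor_a_row = {"SampleName": "S-001", "Compound": "caffeine", "Conc_mg_mL": "0.52"}
vendor_b_row = {"sample": {"id": "S-002"}, "analyte": "caffeine", "result": {"ug_per_mL": 480}}

# Both records now share the same fields and the same unit.
harmonized = [from_vendor_a(vendor_a_row), from_vendor_b(vendor_b_row)]
for record in harmonized:
    print(record)
```

Once every source is mapped to the same record shape, any downstream target only needs to understand that one shape rather than each vendor format.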

ELN/LIMS as Data Source: From Design to Execution

Just like almost everything else in life, execution starts with planning and design. Experimental work starts with an assay design or a request to measure a list of samples (sometimes called a batch). Using TDP, scientists can automatically send experimental designs, batch information, or sample lists to instrument control software, such as Waters Empower, ThermoFisher Chromeleon, or Shimadzu.
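As a rough illustration of what replacing manual transcription can look like, the sketch below turns a sample list into a CSV worklist using only the Python standard library. The column names and the sample list are hypothetical. This is not the import format of Empower, Chromeleon, or any other instrument control software, and it is not how TDP performs the transfer.

```python
import csv
import io

# Hypothetical sample list as it might be exported from an ELN or LIMS.
sample_list = [
    {"sample_id": "S-001", "vial": "A1", "injection_volume_ul": 5.0},
    {"sample_id": "S-002", "vial": "A2", "injection_volume_ul": 5.0},
]


def to_worklist_csv(samples: list[dict]) -> str:
    """Serialize a sample list to CSV text with a fixed column order."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=["sample_id", "vial", "injection_volume_ul"],
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(samples)
    return buffer.getvalue()


worklist = to_worklist_csv(sample_list)
print(worklist)
```

Generating the worklist from the design record means the sample identifiers are copied by code rather than retyped by hand at the instrument.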

ELN/LIMS as Data Target: From Execute to Analyze

Once the scientist finishes execution, TDP automatically collects the data, then harmonizes, validates, and pushes it into the ELN/LIMS. Scientists can also search TDP for specific data, retrieve it, and import it into the ELN/LIMS to start analysis.
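The validate-then-push step can be pictured with a small sketch. Everything here is an assumption made for illustration: the record fields, the two validation rules, and the in-memory lists standing in for ELN and LIMS import endpoints. It shows the general pattern of checking each record once and fanning valid records out to every target, not the actual TDP pipeline.

```python
from typing import Callable

Record = dict
Target = Callable[[Record], None]


def validate(record: Record) -> list[str]:
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    if not record.get("sample_id"):
        problems.append("missing sample_id")
    if not isinstance(record.get("value"), (int, float)):
        problems.append("value is not numeric")
    return problems


def push_valid_records(
    records: list[Record], targets: list[Target]
) -> list[tuple[Record, list[str]]]:
    """Send valid records to every target; return rejected records with reasons."""
    rejected = []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
            continue
        for target in targets:
            target(record)
    return rejected


eln_inbox: list[Record] = []   # stand-in for an ELN import endpoint
lims_inbox: list[Record] = []  # stand-in for a LIMS import endpoint

records = [
    {"sample_id": "S-001", "value": 0.52},
    {"sample_id": "", "value": 0.48},        # fails: no sample identifier
    {"sample_id": "S-003", "value": "n/a"},  # fails: non-numeric result
]

rejected = push_valid_records(records, [eln_inbox.append, lims_inbox.append])
print(len(eln_inbox), len(lims_inbox), len(rejected))
```

Returning the rejected records along with the reasons keeps a trail of what was held back, which is the kind of information a reviewer would otherwise have to reconstruct by hand.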

Bringing Together End-to-End Data Exchange and Management

Improve productivity and ensure data integrity. TDP as the data (exchange) layer between Execute and Analyze eliminates the need to manually input design parameters and sample identifiers directly into the instrument control software or enter data back into ELN/LIMS. This automation saves scientists time and avoids compliance issues related to manual transcription, eliminating tedious multiple scientist reviews.

Make data actionable. Automated integrations allow ALL scientific data to be collected, stored, and queried through TDP. Data science tools can leverage experimental data within ELN/LIMS and rich data sets to provide insights to accelerate innovation.

Accelerate time to value. TDP connects all data sources and targets while also managing and providing access to FAIR data. Biopharma organizations now have a single solution where many previously existed, thus simplifying complexity and reducing time needed to implement and deliver value.

Going Beyond ELN/LIMS with TDP

One question you may be asking is “don’t ELN or LIMS connect directly… isn’t that more efficient?”

While it is possible to create a point-to-point connection between ELN and LIMS, this approach comes with many limitations when compared to a scientific data cloud, such as TDP, including:

- Scalability. A point-to-point integration may work fine for exchanging data between two instruments or informatics applications, but what happens when a third, fourth, or fifth data source or target is needed? Point-to-point integrations simply aren’t scalable: each additional integration adds to your organization’s development and maintenance costs and frequently leads to reliance on system integrators and professional services.

- Connecting ELN and LIMS applications allows for the capture and exchange of data, but doesn’t provide access to centralized, FAIR scientific data. Scientists must still go through the ELN and LIMS interface to access the data from applications. This ELN/LIMS connection essentially becomes another data silo. TDP collects, harmonizes, and provides access to ALL scientific data in a single place.

- Transferring data between ELN and LIMS only represents one use case for scientific data. Most biopharma companies wish to gain insights from their data that can guide scientific research direction, accelerate discovery, and increase business productivity. Advanced analytics and visualizations require access to data that has been prepared and centralized to provide this level of value to the organization.

- Supporting only file capture and tabular data import. ELN/LIMS are not built to handle sophisticated integrations that involve software interfaces (Waters Empower), IoT-based sources (blood gas analyzers), semi-structured data sources (plate reader exports), or binary data (mass spectrometry files).
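The scalability concern in the first point above can be made concrete with a little arithmetic. The sketch below assumes the worst case, in which every pair of systems needs its own integration, and compares that with a hub model in which each system connects once to a shared exchange layer. Real labs rarely need every pairing, so treat the numbers as an upper bound rather than a measurement.

```python
def point_to_point_links(n_systems: int) -> int:
    """Worst case: every pair of systems needs its own integration."""
    return n_systems * (n_systems - 1) // 2


def hub_links(n_systems: int) -> int:
    """Each system connects once to a shared exchange layer."""
    return n_systems


# Columns: number of systems, pairwise integrations, hub connections.
for n in (2, 3, 5, 10):
    print(n, point_to_point_links(n), hub_links(n))
```

With two systems, a single direct link is the simpler choice. The pairwise count grows quadratically while the hub count grows linearly, which is why the gap widens quickly as more sources and targets are added.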

In short, while you can directly connect ELN/LIMS, this approach is less efficient than it seems on the surface. Complexities arise when you need to do more with your data than simply connect LIMS and ELN applications. Most biopharma organizations quickly discover that point-to-point integrations fall far short of the integration flexibility and centralized data management of TDP, which slows cycle times around the DMTA loop. That is exactly the opposite of what lab automation and digitalization are meant to do!

To learn more about how to automate data workflows with your ELN or LIMS, check out this video.